Conducting concept testing without interviewing respondents is not going to replace conventional market research, but in the right circumstances it offers major benefits.

To be clear, this is still empirical research with customers and potential customers. Although AI-enabled, it does not involve creating synthetic data. The key difference is that, rather than addressing questions to respondents, we capture their responses to questions they ask one another on social media platforms.

AI now enables us to combine two groundbreaking capabilities. First, rather than just collating social listening data, we can now sift, order and interrogate it.

Secondly, some AI tools can now ‘read’ images, extracting and analysing their characteristics - in this case ten designs of running shoes.

These are real people participating within the last month. They are not answering questions put to them by an interviewer or moderator. They are interacting with one another, offering views and exchanging experiences. (Ironically, this is what we aspire to in a successful focus group, as the participants spontaneously move from one topic to another, with only gentle guidance required from the moderator.)

This is a fundamental difference between this approach and another recently launched service, which measures responses to visual concepts against the vendor’s synthetically produced AI ‘personas’. By contrast, this approach is based exclusively on data derived from real customers, selected as users of brands in the market.

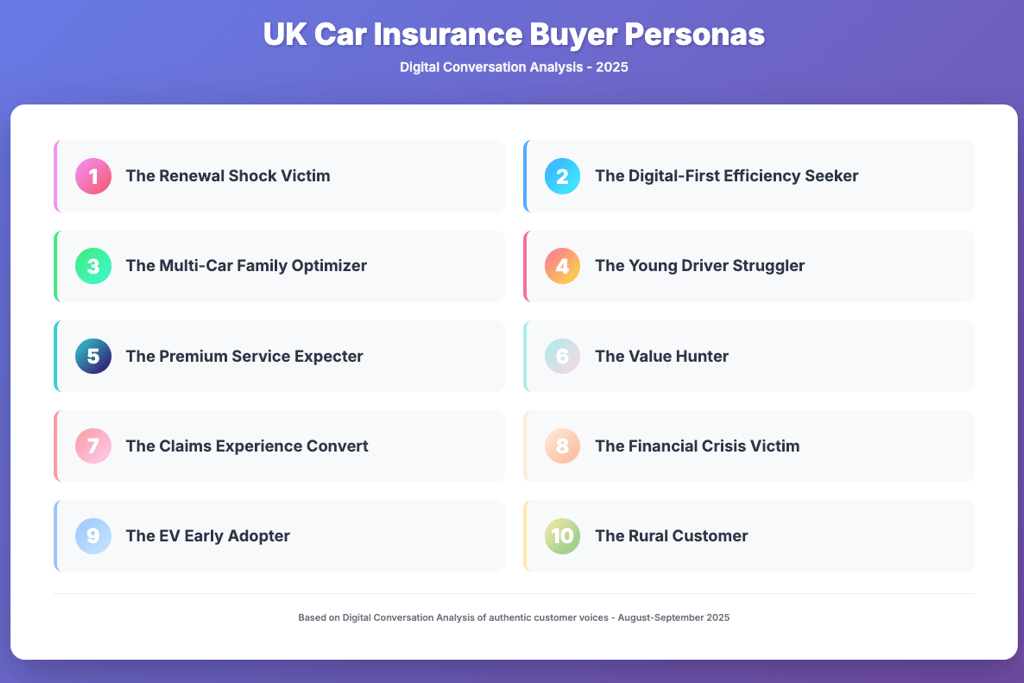

We can summarise the whole process, what I term Digital Conversation Analysis (DCA), in four stages.

Stage One involves extracting social listening data from selected platforms. You will need to decide where you will find active users of your product or service and where you can find good conversations. In the case of running shoe brands, Reddit has specialist forums where users exchange informed product preferences and relate their experiences of each brand.

Having collected the data, Stage Two involves the vital process of filtering out all promotional or influencer-based content. You will get a distorted view of the market if social listening is unfiltered. On some platforms promotional content is minimal - for example, Xiaohongshu (RedNote), Mumsnet and Trustpilot are close to 100% organic. At the other extreme is Instagram, where you are left with just 15%. In between is X, at around 36% organic content.
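
As a rough illustration of the Stage Two filter, the sketch below flags posts that contain obvious promotional markers and reports the organic share of what remains. The marker list, the sample posts and the function names are assumptions made for this example; they are not the filter used in the study, which would need tuning for each platform.

```python
import re

# Hypothetical promotional markers, for illustration only.
# The \b stops "#ad" from matching brand hashtags such as "#adidas".
PROMO_PATTERN = re.compile(
    r"#(?:ad|sponsored)\b|discount code|affiliate link|link in bio",
    re.IGNORECASE,
)

def is_organic(post: str) -> bool:
    """Return True when the post contains none of the promotional markers."""
    return PROMO_PATTERN.search(post) is None

def organic_share(posts: list[str]) -> float:
    """Fraction of posts that survive the promotional filter."""
    if not posts:
        return 0.0
    return sum(is_organic(p) for p in posts) / len(posts)

# Invented sample posts, not taken from the dataset.
posts = [
    "Switched to a wider toe box and my blisters are gone.",
    "Use my discount code RUN10 for 10% off! #ad",
    "Anyone else find these run half a size small?",
    "New colourway just dropped, link in bio #sponsored",
]
organic_posts = [p for p in posts if is_organic(p)]
print(f"{organic_share(posts):.0%} organic")  # prints "50% organic"
```

A keyword filter like this only catches disclosed promotion; undisclosed influencer content would need a more capable classifier.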

Stage Three involves asking the AI to analyse the data — in this case we were working with 3,395 organic conversations from running shoe buyers on Reddit and X.

At this point we can run through our chosen qualitative research procedures and compile content for a detailed report based on the wealth of data. For example, we can identify and rank the most successful brands based on sentiment. This, in itself, offers a valuable report on the market. It provides the backdrop to your assessment of design, packaging and advertising communications concepts.
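
Ranking brands by sentiment can be pictured as a simple group-and-average step once each conversation has a score. The sketch below assumes every record is a (brand, score) pair with scores between -1 and 1; the brand labels and numbers are placeholders, not results from the study.

```python
from collections import defaultdict
from statistics import mean

# Placeholder records: (brand, sentiment score in [-1, 1]).
scored = [
    ("Brand A", 0.8),
    ("Brand B", 0.2),
    ("Brand A", 0.4),
    ("Brand C", -0.3),
    ("Brand B", 0.6),
]

def rank_brands(records):
    """Return (brand, mean sentiment) pairs, highest mean first."""
    by_brand = defaultdict(list)
    for brand, score in records:
        by_brand[brand].append(score)
    averages = {brand: mean(scores) for brand, scores in by_brand.items()}
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)

ranking = rank_brands(scored)
for brand, avg in ranking:
    print(f"{brand}: {avg:+.2f}")
```

With so few records per brand the averages are unstable; in practice you would also report how many conversations sit behind each figure.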

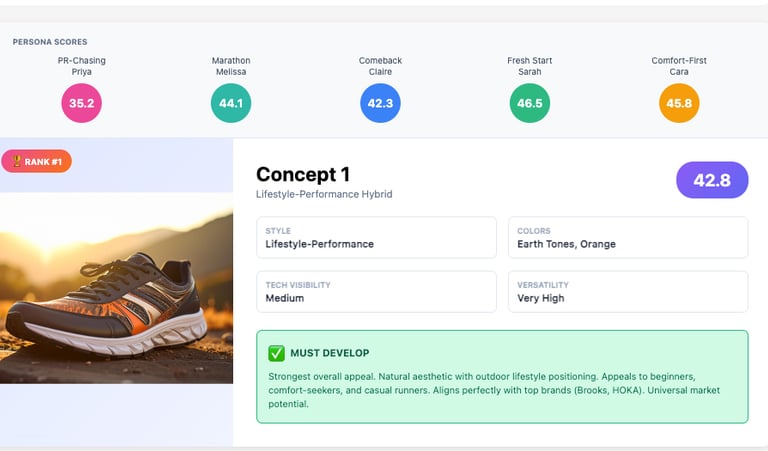

As part of this analysis, we ask the AI to create a set of personas, based on a complex set of data.

Each persona is anchored in the data and the objectives of your research.

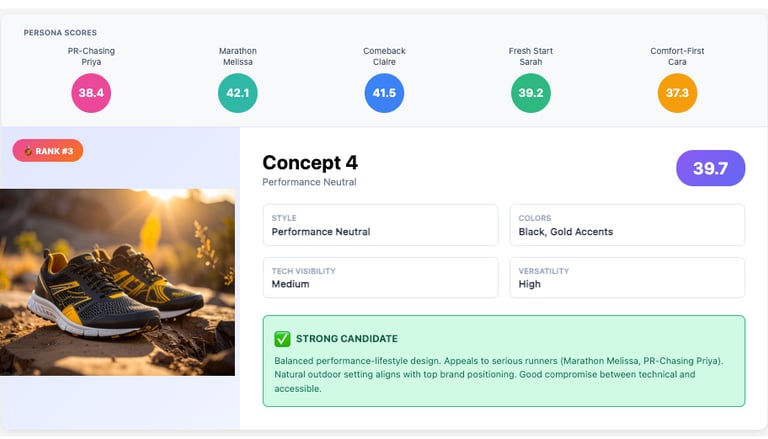

Here are examples of two personas and how they are matched with running shoes.

Performance Elite Runner

Profile: 25-35 Male, Expert level, £148 average spend, 89% positive sentiment

Key Interests: Performance, technology, comfort

Visual Match: Concept 2 (Bold Performance) & Concept 8 (Bold Statement)

Design Elements They Prefer:

Bold, aggressive colours (red, blue, high-contrast)

Technical mesh materials with visible tech elements

Chunky, engineered midsoles suggesting performance

Competition-ready, attention-grabbing aesthetics

Why It Works: Expert runners want visual cues that communicate serious performance capability and cutting-edge technology

Recreational Jogger

Profile: 30-40 Male, Intermediate level, £88 average spend, 95% positive sentiment

Key Interests: Comfort, style, cushioning, versatility

Visual Match: Concept 6 (Monochrome Minimalism) & Concept 9 (Urban Sophistication)

Design Elements They Prefer:

Clean, understated colour palettes (black, white, navy)

Versatile aesthetics that work for multiple activities

Professional appearance suitable for various settings

Balanced technical and lifestyle positioning

Why It Works: Need shoes that transition from exercise to daily life without looking overly athletic

The set of empirically based personas is crucial for Stage Four, which involves conducting a visual analysis of the concepts - in this case ten running shoe images (created by AI).

The analysis draws on two sets of data: verbal data on what people reveal about their preferences, attitudes and experiences with different running shoe brands, and visual data extracted from the concept images.

Verbal Data

Demographics

Age ranges (20-30, 25-35, 35-45, 45-55, etc.)

Gender (Male/Female distribution patterns)

Location (geographic preferences and regional differences)

Running experience levels (Beginner, Intermediate, Advanced, Expert)

Behavioural Preferences

Platform activity patterns (Reddit discussion style vs. X engagement)

Content engagement types (technical discussions, product reviews, community support)

Purchase timing (impulse vs. research-driven buying)

Information seeking behaviour (detailed research vs. quick recommendations)

Running-Specific Attitudes

Performance orientation (PR-focused vs. casual fitness)

Technology adoption (early adopters vs. traditional preferences)

Health consciousness (injury prevention, recovery focus)

Environmental values (sustainability concerns, eco-friendly preferences)

Fashion consciousness (style importance vs. pure function)

Pain Points & Motivations

Injury recovery needs (plantar fasciitis, joint issues, rehabilitation)

Performance goals (speed improvement, distance achievements)

Comfort requirements (daily wear, long-distance comfort)

Budget considerations (value-seeking behaviour, premium willingness)

Lifestyle integration (work-to-gym versatility, urban use)

Buying Behaviour Patterns

Price sensitivity indicators (budget mentions, value comparisons)

Brand loyalty expressions (repeat purchases, brand advocacy)

Research depth (detailed technical discussions vs. quick decisions)

Influence sources (peer recommendations, professional advice, reviews)

Communication Style & Language

Technical vocabulary usage (running jargon, shoe technology terms)

Community engagement level (active participation vs. lurking)

Problem-sharing openness (injury discussions, performance challenges)

Recommendation patterns (specific model suggestions, general advice)

These characteristics are then aligned with the style of shoe being tested.

Visual Data

Colour Strategy Patterns:

Earth/Natural Palette: Concepts 1, 4, 10 use warm browns, golds, and natural tones

Bold Multi-Colour: Concepts 2, 3, 5 feature vibrant, attention-grabbing colour combinations

Monochrome/Neutral: Concepts 6, 7, 9 employ sophisticated blacks, whites, and greys

High-Contrast: Concept 8 uses dramatic black with red accents

Material Visual Cues:

Technical Performance: Mesh uppers with synthetic overlays (Concepts 2, 3, 5, 7)

Premium Positioning: Leather-look or sophisticated synthetic materials (Concepts 4, 9, 10)

Minimalist Clean: Smooth, understated material presentations (Concept 6)

Heritage Craft: Traditional material appearances suggesting quality (Concepts 1, 10)

Target Market Visual Signals:

Performance Athletes: Bold colours + technical materials + aggressive styling

Lifestyle Consumers: Gradient colours + clean lines + versatile aesthetics

Premium Buyers: Rich materials + sophisticated lighting + craftsmanship cues

Sustainability-Minded: Natural colours + organic styling + earth-friendly aesthetics

AI excels at managing, sorting and making sense of such a huge collection of observations.
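
To make the matching step concrete, here is a deliberately simplified sketch that scores a handful of the concepts against two personas by counting shared tags. The tags are a hand-made reduction of the colour and material groupings listed above, and the overlap score is an assumption for illustration; it is not how the AI tool itself weighs the data.

```python
# Hypothetical tags, reduced from the colour and material groupings above.
# Only five of the ten concepts are included to keep the example short.
CONCEPT_TAGS = {
    1: {"earth", "heritage"},
    2: {"bold", "technical_mesh"},
    6: {"monochrome", "minimalist"},
    8: {"high_contrast"},
    9: {"monochrome", "premium"},
}

# Hypothetical preference tags derived from the two persona descriptions.
PERSONA_PREFS = {
    "Performance Elite Runner": {"bold", "high_contrast", "technical_mesh"},
    "Recreational Jogger": {"monochrome", "minimalist", "versatile"},
}

def top_concepts(prefs: set[str], concepts: dict[int, set[str]], n: int = 2) -> list[int]:
    """Rank concepts by how many tags they share with the persona's preferences."""
    ranked = sorted(concepts, key=lambda c: len(prefs & concepts[c]), reverse=True)
    return ranked[:n]

for persona, prefs in PERSONA_PREFS.items():
    print(persona, "->", top_concepts(prefs, CONCEPT_TAGS))
```

A real analysis would draw on far more attributes per persona and per image, which is exactly where the AI's capacity to handle volume matters.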

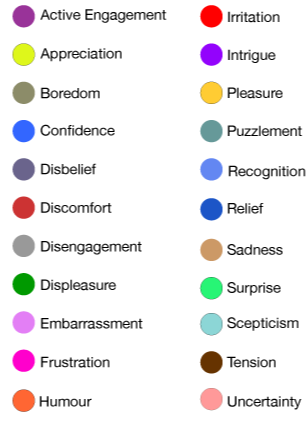

But we shouldn’t conclude that these results reflect only rational decision-making and neglect the emotional. The remarks made about running brands are assessed for their emotional force, so the sentiment results reflect not just how many positive or negative comments are made, but the intensity with which they are expressed.

To conclude, this is a procedure made possible by AI, enabling agencies or end clients to test concepts on their target market rigorously and cost-effectively, in a very short time. Moreover, clients have an invaluable dataset of recent customer data to which they can return to run tests on, for example, product claims or taglines.

This data constitutes a detailed snapshot of the market which can be updated at regular intervals, making it a powerful brand tracking vehicle. Subsequent concept testing can be done on these updated results, keeping new concepts up-to-date with changes in the market.
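
The difference between counting comments and weighting them by intensity can be shown with a small worked example. The polarity and intensity values below are invented, and the weighting formula is one plausible choice rather than the scoring actually used.

```python
# Each comment: (polarity, intensity). Polarity is +1 or -1;
# intensity is a made-up strength of feeling between 0 and 1.
comments = [(+1, 0.2), (+1, 0.3), (+1, 0.2), (-1, 0.9)]

def count_based(comments):
    """Net sentiment that treats every comment equally."""
    return sum(polarity for polarity, _ in comments) / len(comments)

def intensity_weighted(comments):
    """Net sentiment where each comment counts in proportion to its intensity."""
    total_intensity = sum(intensity for _, intensity in comments)
    return sum(polarity * intensity for polarity, intensity in comments) / total_intensity

print(f"count-based:        {count_based(comments):+.3f}")
print(f"intensity-weighted: {intensity_weighted(comments):+.3f}")
```

Three mild positives outnumber one strongly negative comment, so the count-based score is positive, while the intensity-weighted score turns negative.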